The Right Tool for the Job, Abi Reynolds

I’m sharing notes from UX Scotland 2014, which took place in Edinburgh Thursday and Friday this week.

Abi Reynolds (Irish, but “go England as I got them in the workplace sweepstakes!”) presented to a packed room on design methodologies, methods, and case studies based on her experience at Irish/UK betting company Paddy Power.

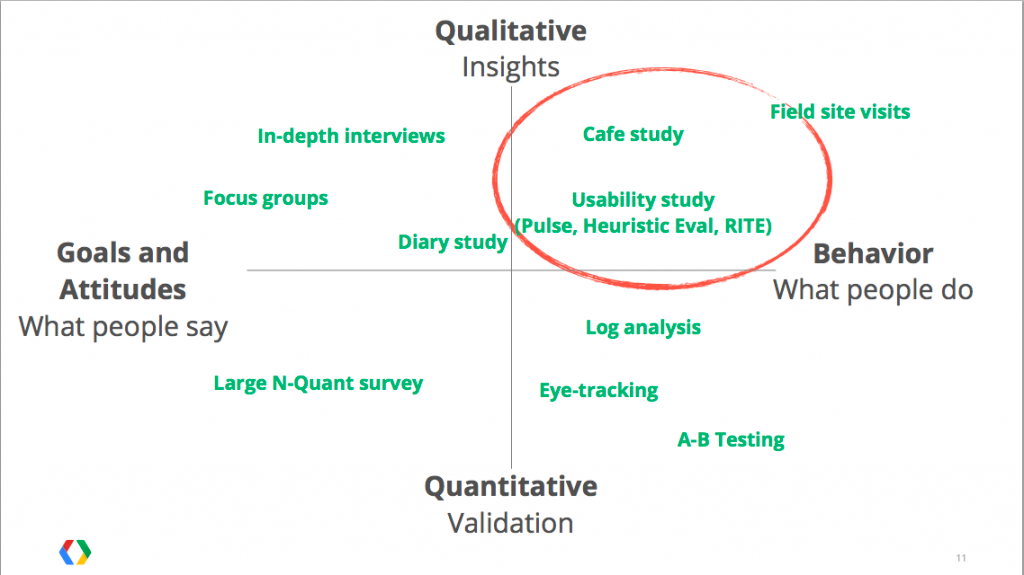

She explained that methodologies are the ‘why’ to the ‘what’ of methods. The Google Agile Research for Android is a good reference for the angle of your research. Generally, use quantitative methods to test assumptions and qualitative methods to find out what you don’t know.

Drawing on examples from Paddy Power, she talked in more depth about ethnographic research, internal UX, and research panels.

Ethnographic Research

The ethnographic research was similar to Chris Clarke’s at Guardian Football in that they tracked people across the course of their betting week. For Paddy Power, the focus was the small but significant segment of regular betters (who are far more valuable in terms of revenue for the company): how they relate to betting and the Paddy Power brand, and why they might switch or stay. “Getting 1% of people loyal to another brand to switch to Paddy Power is more important than catering to casual users”.

She used Ethos to get 22 participants to upload mobile stories over the course of a week. I was interested in the specifics of the recruiting and incentive process. In general she recommended outsourcing recruiting wherever possible (seconded). When it came to incentives, she staggered the rewards: £40 for showing up, £100 for doing the week-long study, and an extra £50 for the best (“most meaningful, not most frequent”) entries: “they did the odds, 22 people £50 prize, and quickly realised there was a good chance they’d get it”. As it turned out, the feedback was so good (“I should have guessed that betters get really emotional about football”) that they gave several participants the bonus rather than just the one.

She also pointed out that qualitative studies like these can generate huge amounts of data, so it’s worth knowing upfront what you want to do with it: will you make a day in the life? Recommendations? Another option is to use the data to run a workshop with stakeholders.

Internal UX

Internal UX involved testing potential changes to the site. Their two offices had slightly different setups (video link vs good old one-way mirror), but both allowed a third party of observers to watch testing from another room as it happened and even ask questions. Reynolds had actually found that “the important thing was designing what happens in the other room”, such as getting other teams to understand the potential benefits of seeing user testing. Testing in the rather grand Paddy Power buildings may also have slightly cowed some participants, but she felt that good screening from recruiters helped, as did lying and saying the testers weren’t involved in the design.

Research Panels

Finally, Reynolds had had a not-entirely-successful-but-still-interesting foray into research panels. Attempting to set up a study on a sub-brand, they got some feedback but didn’t have enough members to carry out much meaningful research.

Her feedback on it was:

- Allow enough time: they had only 3 months to collect feedback, which wasn’t enough. She’d recommend a minimum 6-month timeframe.

- Ensure you’ve got a large enough pool of customers. As this is passive it needs a large pool to make it work (akin to the 90/10/1 ratio, maybe?)

- …but make sure you can target loyal customers. Aside from the money incentive, the people they got wanted to have a voice and give something back to the brand. Too high an incentive and you’ll get people just doing it for the money. (I’ve heard usability agency Optimal Usability say a similar thing about survey incentives.)

Finally, it was worth investigating the tools that Reynolds uses. She uses Ethos (as mentioned above), miituu lite, What Users Do and Five Second Test amongst others (as well as recommending Stephen Anderson’s ‘Designing Seductive Interactions’ as a good primer for changes). In response to an audience question about collating qualitative and quantitative data, Alberta Soranzo suggested Dedoose as a good option.

EDIT 22/6: turns out I took some numbers Abi gave on better ratios literally when they were meant as more of a general statement. This has been amended to be more of an analogy than cold hard statistics. Fact-check fail.
