Being a responsible Service Standard assessor

I've had the privilege and responsibility of assessing the design of UK government services since 2017. I previously wrote about the history of the Service Standard.

In this post I'm writing about being a responsible Service Standard assessor, based on my experience as a design assessor.

Service Standard assessors

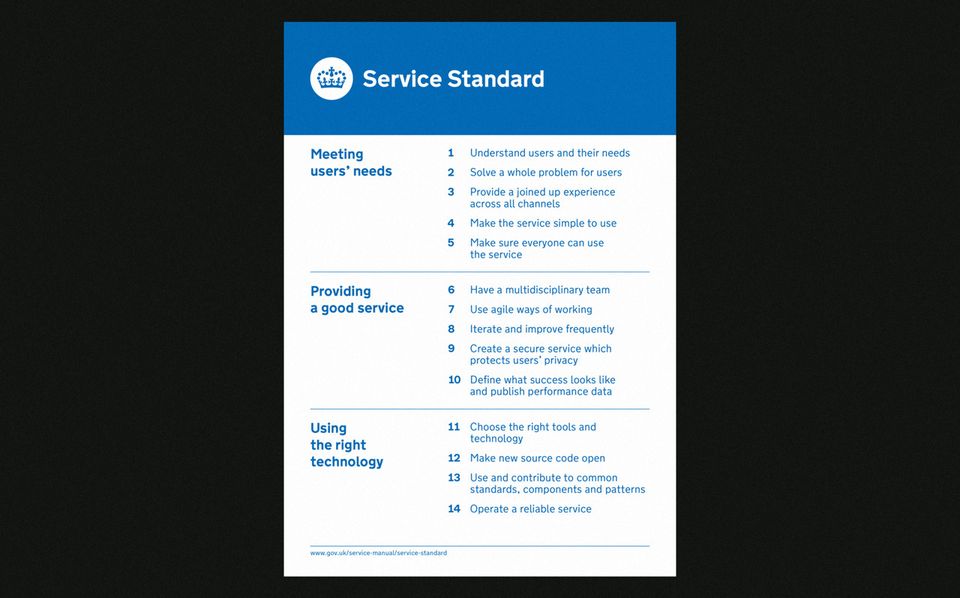

Service Standard assessments happen as a form of 'stage gate' before a built digital service can go to the next stage. A panel of 4 or 5 experts (product or delivery management, user research, design, technology, and sometimes performance analysis) spend 4 hours with the team to assess the service against the Service Standard. They then write a report which is usually published on GOV.UK. There's more on the process on GOV.UK.

Different assessors lead on different points of the standard. Design assessors lead on three points (2, 3, 4) and contribute to others, including 1, 8, 13 and 14.

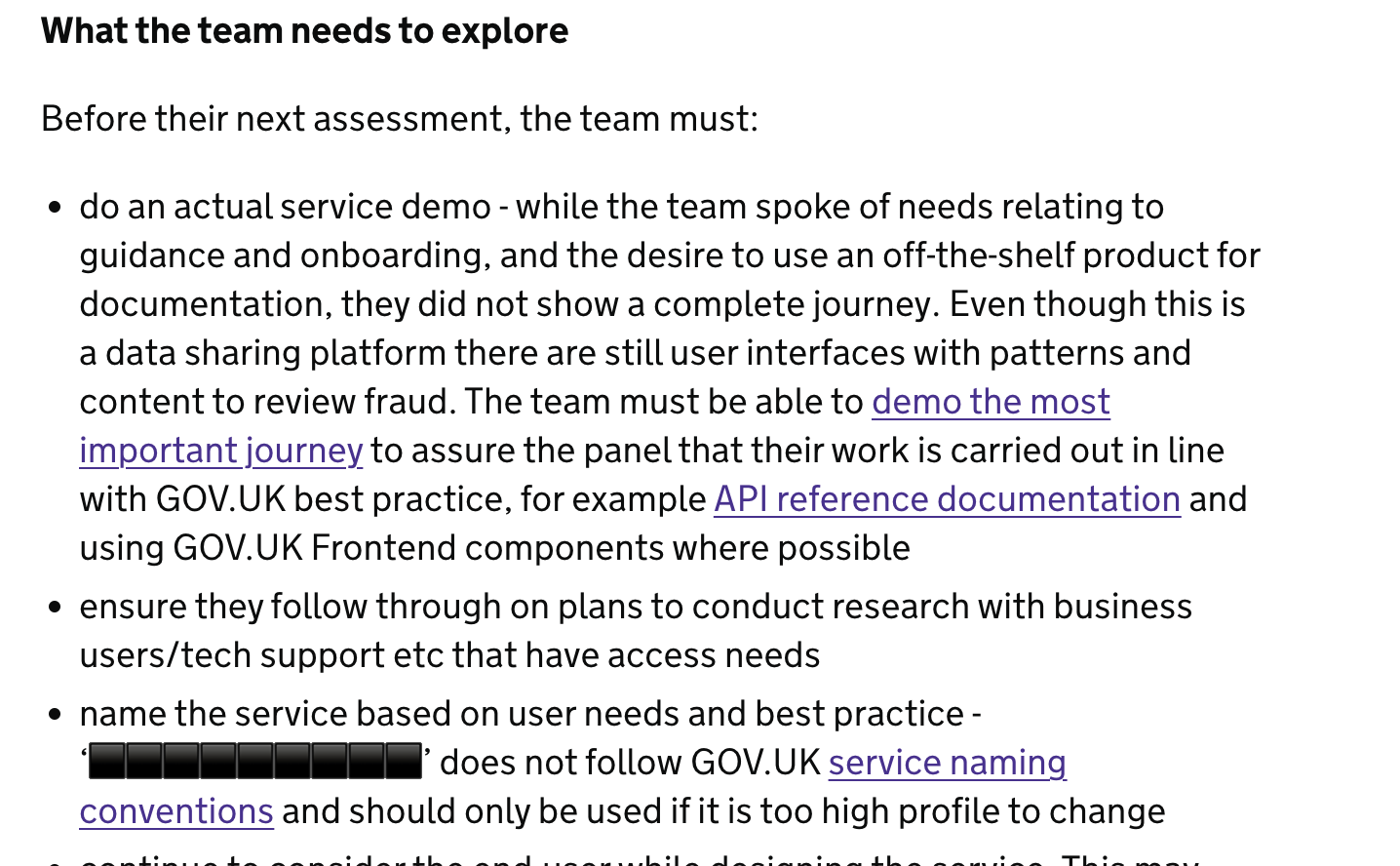

I've written a lot of Service Standard reports since 2017. The screenshots that follow are all recommendations from published Service Standard reports. I've removed information that relates to the specific team and department as that's not relevant for this discussion.

Assessments

Do your homework (if possible)

Assessors are normally sent a briefing pack from the team a week before the assessment. The pack includes some background information and ideally a prototype or link to the live service. I like to go through the information, test the service out and try to make sense of it enough to identify any concerns that I have. I then use the assessor pre-meet (which usually happens the day before the assessment) to discuss these concerns. This helps focus my questioning, especially if earlier sections of the day run over and leave me with less time.

That said, it's not always possible: I've sometimes had to sub in with one or two days' notice! In that case, I'd usually at least try to look at the briefing pack and prototype or live service just before the assessment.

Let people have their moment while still managing time

I know that a lot of assessors groan when a team says "we've got a slide deck", especially if it takes a long time to get to the actual demo (which, as I wrote about in a different blog post, can make an assessment a lot easier if done well). However, as someone who has been service assessed too, I know how demoralising and even enraging it is to not be given the chance to show one's work. It's made me fight to let teams always start without interruption for each section and at least show some things before moving into questions.

My way of balancing the confidence of the team and time in my 30 minute section is to tell the team that they have 15 minutes for anything they want to show me before I use the remaining time for questions. If I'm really short on time and the team has been kind enough to send through their slide deck beforehand, I may summarise what's in the deck to acknowledge it, and then move on to questions.

Decisions and reports

Ask the panel "is it helpful to make the team come back for a reassessment?"

As much as I'd like all services that I assess to be perfect, that's not always the case. However, some recommendations are more important than others. I often use the question "is it helpful to make the team come back for a reassessment?" as a lens to check whether borderline concerns mean that a point is met or not met.

This also worked in line with what the team would be doing next:

- Alpha: "are we comfortable with them getting a full development team?"

- Beta: "are we comfortable with them having no controls on use?"

- Live: "are we comfortable with them not having a full development team any more?"

If we believed the team could complete a recommendation without another assessment, we'd mark the section as met with what we described as a 'strong recommendation', using language such as "this must be done before continuing to public beta".

Write for your future assessor

Even if the assessment is met, there are often important recommendations to consider, for example things that the team needs to do in the next phase. Writing these in enough detail for someone else to pick up is really helpful later. (Unfortunately I have done re-assessments where I've looked at the previous recommendations from a different assessor and thought "without being there, I can't quite understand the context of the recommendation".)

Write for the team

For several years I did internal assessments before moving to cross-government ones. Internal assessments were done by a department for its own services when they either had fewer than 100,000 transactions or were only for their own staff rather than the public.

I'm glad that I started by writing internally. Thinking about how the feedback would be for people that I'd be seeing every day reminded me to be thoughtful and fair.

Use the Service Manual

When I was at DWP, I had the luck to be on a project where I got to map the Service Manual against phases and roles (Mural template here). Since then, I’ve made a point of trying to link to sections when giving feedback - I’ve found it helpful as it saves words and is also a good guardrail to make sure that I’m giving information based on evidence rather than opinion.

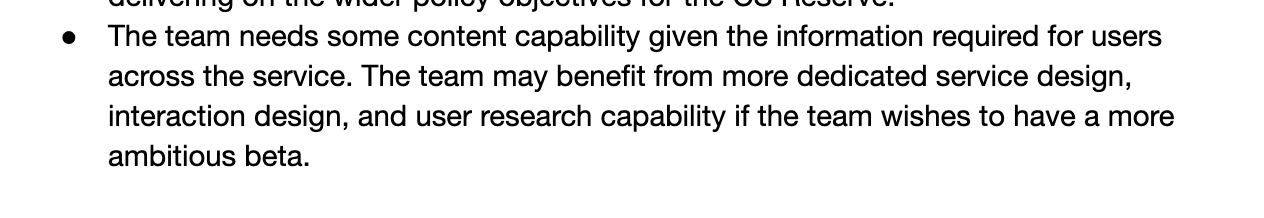

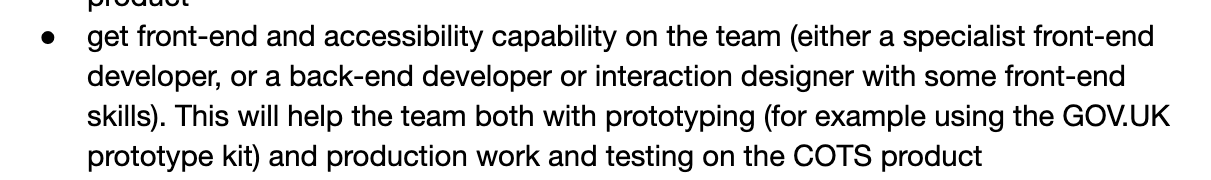

Capability, not roles

Something I learned from assessing smaller teams, particularly arms-length bodies, was that much as they’d like a full set of specialist roles, budgetary constraints often meant it just wasn't practical.

Since then, I’ve done a lot in my reports to talk about capability rather than roles, and sometimes prioritise which is more important to get.

![make sure they have the extra user-centred design capability they have specified for beta, in particular technical writing (link) - this is distinct from content design. The team may also need ad-hoc graphic design capability for signage recommendations if this is not available through the [department name].](https://www.vickyteinaki.com/content/images/2022/12/image-10.png)

Help teams 'look sideways'

Aside from the Service Manual, I try to point teams towards resources that can help them. I'm lucky from my time in government to know about a lot of things available such as the Design System catchups and 'Get Feedback' sessions, but have also sometimes done digging around my contacts to see if there are teams doing similar work who I can point teams to.

![The virtual 'get feedback' sessions [link] for government designers may be helpful if the team wants advice](https://www.vickyteinaki.com/content/images/2022/12/image-7.png)

![look to other departments doing similar things, both within [department] and beyond. there is work happening in parallel for [this type of] data, HMRC created a developer hub which met the Service Standard, and the NHS also has a well-resolved developer ecosystem. The Data Standards Authority's API and Data Exchange community [link] is open to all in the public sector and may help the team learn from similar services](https://www.vickyteinaki.com/content/images/2022/12/image-6.png)

Outcomes, not solutions

For everything apart from implementation of GOV.UK Design System patterns (there is text about how to use accordions and select boxes!), I’m conscious of separating out my ideas from the real thing to do. This is partly from my own experience in a service team of being given recommendations that didn’t fit the evidence, and partly to keep the power with the team so that they can try and prove me wrong. I’m a big fan of the phrase “for example” to separate my suggestion from what the team really needs to test.

![Explore and test more scenario-based ways for users to find the form, and start from existing entry points before proposing new start pages. For example, the team could try filtering user from the existing [service] start page. This could both help users find the right form without learning technical language, and also work towards the strategic vision of all forms being available online in a single place.](https://www.vickyteinaki.com/content/images/2022/12/image-5.png)

Be mindful of constraints

I'm particularly careful when it comes to recommendations for things that the team isn't building, for example anything involving communications.

I learned this from having to pick up an internal reassessment from another design assessor. That assessor had told the team to “go wild” on the formats of letters being sent to users, so in the reassessment the team duly presented a range of idiosyncratic designs. After some quick Slack messages with other people in that programme, it turned out that the letters had been designed with no insight into the current printing process, which was handled by an external provider with print runs of at least 500 every 6 months. I had to have an awkward conversation, as they'd done what they'd been told to do… but what they'd been told to do had ignored reality.

![complete their planned work on communications and improving other offline materials - working with [department name] comms will make sure that anything the team proposes meets department guidelines (for example, the layout of print documents and any printing constraints should [department name] be required to do print runs)](https://www.vickyteinaki.com/content/images/2022/12/image-4.png)

![understand the constraints for GOV.UK templates when suggesting out-of-service changes.The team has identified useful information needed before signing in, for example the paper form for offline use. However start pages cannot be customised [link]. The team may therefore be designing and testing options that are impossible to release. [department name]'s GOV.UK content designers and the cross-government content community can assist in understanding the constraints.](https://www.vickyteinaki.com/content/images/2022/12/image-3.png)

Do the hard work to make recommendations simple (especially if it's "not met")

If a team hasn't met a point, I'm careful not to overplay it. I feel that getting 'not met' as an outcome is bad enough; excessive information about it is enough to make the reader zone out.

Something that I consider is whether there's a root cause for the problem - for example confused journeys may be about not entirely understanding the whole problem, or that the team is really trying but there just aren't enough people to do all the work.

I also consider theming and moving feedback around. Sometimes this means checking with other assessors about reshaping the recommendations. For example, if I've covered a particular theme in one point, I'll just refer back to it elsewhere with "(as covered in point 6)" or similar. I do this to emphasise the importance of the recommendation without making it overwhelming.

I'll also choose to downplay or even not mention minor issues—if a service has major issues to fix, I believe that the minor issues can wait until the next assessment.

Final thoughts

Service assessments are useful but tough. Back in 2019 I made a bingo card gently poking fun at some of the issues (I probably need to do a remote-era one), but they are stressful events where often teams are trying their best but just don't know what good looks like.

With that in mind I'm watching with interest how other places such as Employment and Social Development Canada are looking to make the assessments feel less combative.

In the meantime I'm heartened to see that there is some free self-directed training on Civil Service Learning about the Service Standard, and that there are rumours about upcoming formally accredited Service Assessor training.
