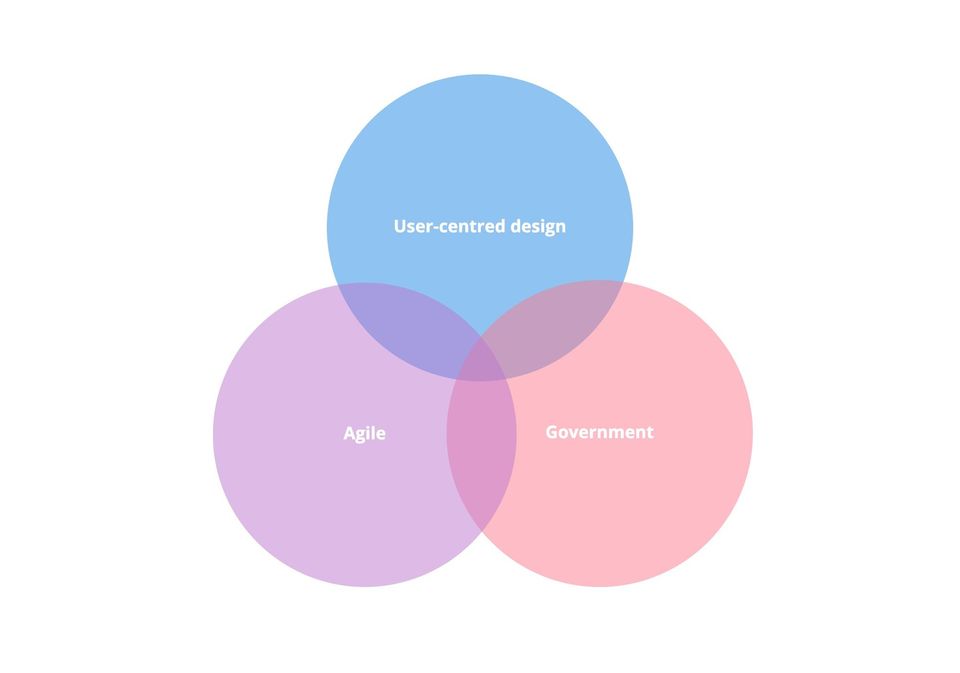

User-centred design, Agile, and government

I've noticed that some user-centred design (UCD) practitioners new to working in government struggle with agile ways of working. Agile is one of the big 'bets' that the Service Standard enforced with government technology projects, but its origins in manufacturing and software engineering mean that it's not an immediate fit with high quality user-centred design work.

The Service Standard and its stage gate process do help make space for user-centred design, but they also mean an extra level of governance and considerations that aren't present in approaches such as Lean UX.

What do we mean by Agile?

Agile is a word that is often used without checking what it means. If we're to be precise, Agile is an approach based on a manifesto and 12 principles.

The manifesto is:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

A group of software engineers (white, male software engineers at a ski resort) created the manifesto in 2001. It was a response to the stage-gated approach known as 'waterfall'. In waterfall, the project moves from requirements to design to delivery. It had its value in hardware engineering (you want to get things right before investing millions in tooling) but less so with software.

There are 12 principles behind the agile manifesto. The 12 principles pretty much have something for everyone. There are some that get spoken about a lot, such as self-organising teams and delivering software frequently. However, one I think is often overlooked is the principle of maximising the amount of work not done. Agile isn't about solving everything in one go, but being intentional about what gets solved and when.

Agile methodologies and frameworks

In practice, you won’t just “do agile” - teams will use one or more methodologies and frameworks. Here are some common ones and what I'd describe as their key contributions to agile:

- Kanban: minimising work in progress

- Lean (manufacture and startup): minimising waste (or validating early) through feedback loops

- Scrum: releasing value in timeboxed increments

- Extreme Programming (XP): frequent code releases through continual communication ('pair programming') and testing

Agile and user-centred design

These frameworks started in either manufacturing (Kanban and Lean) or software engineering (Scrum and Extreme Programming). This means that they weren't set up with user-centred design practitioners (user researchers, interaction designers etc) in mind. However, it's possible to include this work in many of the agile methodologies. As far back as 2008, Autodesk was talking about using dual tracks of discovery (user research and design) and delivery work within a single team.

Later Jeff Gothelf expanded this with the concept of 'lean UX'. This is a mindset that brings together UX (including design thinking) and Agile (including Lean Startup).

The Lean UX book (and Gothelf's Lean UX Canvas) are worth a look. There's also a great Boxes and Arrows blog post from 2013 about the UX perspective on Agile. However, for now, I'm going to focus on a Lean UX manifesto and how this relates to Service Standard UCD work.

The Lean UX manifesto

A few years ago, some UX designers put together a Lean UX Manifesto. It didn't really pick up momentum at the time: I only discovered it while writing this blog post. However, I think that its statements are a useful reference and translate well to working to the Service Standard. They are as follows:

- Early customer validation over releasing products with unknown end-user value

- Collaborative design over designing on an island

- Solving user problems over designing the next “cool” feature

- Measuring KPIs over undefined success metrics

- Applying appropriate tools over following a rigid plan

- Nimble design over heavy wireframes, comps or specs

Early customer validation over releasing products with unknown end-user value

The Service Standard's focus on prototyping (screens, experiences, processes) and the discovery-alpha-beta-live cycle hews closely to this. In particular, it encourages validation in the alpha stage through testing concepts. There's good reason for this: there's an entire book about government big-bang launches that were 'blunders'. Course correcting or even stopping is important.

Part of the validation can be about being able to generate hypotheses. There's more on this in the Service Manual. Some other references include Ben Holliday's intro to hypothesis driven design and Becky Lee's description of doing this at DWP.

Something that I remind some more junior UCD people of is that validation doesn't always mean usability testing. If a change seems low risk and easy to revise (for example, new information that means changing content on one page), it's often better to just 'ship and measure'. This particular phrase comes from Jeff Gothelf's excellent hypothesis prioritisation framework, but is also used in a more user research based version by Ananda Nayda. Harry Vos from GDS has a more rigorous version as well as instructions on how to run the mapping as a team activity.

Collaborative design over designing on an island

In an agile delivery team, the UCD folk are responsible for shaping relevant work to be done - but also calling on other people to give their expertise as required. I often see this interpreted as "design sprints all the time". This isn't the case. What it does mean is being transparent about upcoming work and current work and pulling in people to review and sense check. For example, asking for 30 minutes with a free developer may mean finding out that you can play back more information from another system than you thought.

An interesting distinction with the Service Standard is pushing where possible for multiple UCD people in a team with complementary specialisms. This is its own form of collaborative design. I love the content-interaction duo, as working as a pair usually makes the work better. Others agree: for example Emily and Colin from BPDTS (now DWP) and Rob and Sheida from the Ministry of Justice.

Solving user problems over designing the next “cool” feature

Something that we often have to look at with work is "is this really a tech problem or is it more about guidance?"

The focus on 'problems' is also helpful: a GDS poster that I really like is "find what works, not what's popular". Just because people may say that they want a cool looking account dashboard, doesn't mean that they actually need it.

More than anything, this can mean challenging constraints and "doing the hard work to make it simple". One of my favourite government blog posts is Pete Herlihy's about working closely with lawyers at GDS to make online voting better. I also regularly point people to Adele Murray's work at DWP to improve the letters for Get Your State Pension.

Measuring KPIs over undefined success metrics

While KPIs in the public sector are different from the private sector, they're still valuable. The Service Standard recommends 4 key ones but teams are expected to have other ones.

For UCD folk, focusing on measurement can mean being comfortable with quantitative testing methods (see Yvie Tracey's intro to doing digital experimentation at HMRC and case study of A/B testing the 'Working at Home' service). However, it's also about having a measurement approach: asking how something performs now and how we might improve it. It might mean working with others to be creative about measurements, for example by usability benchmarking or by mixing performance analytics with user research, as Haur Kang and Louise Petrie did for GOV.UK.

Applying appropriate tools over following a rigid plan

One of the biggest learning curves that I've seen people new to this way of working in government deal with is the balance between overplanning and chaos.

From my experience in government, high quality usability testing (with people with a range of access needs and so on) does usually require some lead time. For example, for testing to happen in a particular week:

- the usability testing script needs to be ready the day before if any team members are to review it

- the prototype needs to be ready at least the day before so that the user researcher can check that it works with their script and advise if anything doesn't work

- the usability test planning (possibly as a team as David Travis did at HMRC) needs to be done the week before at latest, to give enough time to do the prototype and testing script

- the recruitment brief needs to be written and sent three weeks before

- the team needs to decide which users to test with at least three weeks before, so as to inform the recruitment brief
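The lead times above can be worked backwards from the first day of testing. Here's a minimal sketch of that arithmetic in Python. The task names, the `research_deadlines` helper and the example date are all made up for illustration, and treating "the week before" as 7 calendar days and "three weeks before" as 21 is my assumption. It also counts calendar days rather than working days, so adjust for weekends and leave.

```python
from datetime import date, timedelta

# Lead times from the list above, counted back from the first day of testing.
# Assumption for this sketch: "the week before" = 7 days, "three weeks" = 21 days.
LEAD_TIMES_IN_DAYS = {
    "decide which users to test with": 21,
    "send the recruitment brief": 21,
    "plan the usability test": 7,
    "prototype ready": 1,
    "testing script ready": 1,
}


def research_deadlines(first_test_day: date) -> dict[str, date]:
    """Return the latest date for each preparation task (hypothetical helper)."""
    return {
        task: first_test_day - timedelta(days=days)
        for task, days in LEAD_TIMES_IN_DAYS.items()
    }


if __name__ == "__main__":
    # Example only: testing starts on Tuesday 25 June 2024.
    deadlines = research_deadlines(date(2024, 6, 25))
    # Print the tasks in date order, earliest deadline first.
    for task, deadline in sorted(deadlines.items(), key=lambda item: item[1]):
        print(f"{deadline:%a %d %b}: {task}")
```

In practice a whiteboard or a shared calendar does the same job. The point is that the earliest deadline lands about three weeks before anyone sits down with a user.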

I've seen this managed a few ways. One user researcher that I worked with had a sticky note matrix on a whiteboard with what, why, with whom, where, and how along one axis, and dates along the other going ahead maybe 3 months. This helped us focus and move things around. It's also possible to do this with tools like Jira.

More than anything, this is about being able to change plans. For example, this could mean changing the focus of the next user research cycle if a design unexpectedly doesn't work (or works perfectly). It can even be about changing something mid-testing: for example, I've had some designs fail in front of 2 people and then used the lunch break to make changes.

Nimble design over heavy wireframes, comps or specs

The strong community around government design means that we have a lot of tools to be nimble. This can include:

- storyboards - like the fantastic and open source UX comics by Steve Cable

- using shorthand in documents to go straight to development - as described by Andrew Duckworth and then used by Adam Silver at the Ministry of Justice

- flow diagrams - Paul Smith has captured many examples

- prototyping in code - as promoted by the GOV.UK prototype kit

- easily updated living documents for important details such as error messages (I wish that there were some examples of these in public but I can't find them!)

More generally, I've found that being nimble means focusing on one thing at a time and being OK with later rework. Rather than trying to design all the journeys, start with slices (for example, happy path or common path), design, test, then try to add on another journey. I've had to redesign some parts of journeys as we added complexity, but the shared knowledge meant we could redesign with confidence.
